Use Tikhonov Regularization to Solve Fredholm Integral Equation

Background #

Fredholm integral equation of the first kind is written as,

The problem is to find , given that and are known. This equation occurs quite often in many different areas.

Discretization-based Method #

Here we describe a discretization-based method to solve the Fredholm integral equation. The integral equation is approximately replaced by a Riemann summation over grids,

where is the grid size along the dimension and , are the grid points with and indicating their indices. When grid size , the summation converges to the true integral. It is more convenient to write it in the matrix form,

where

and with being the identity matrix of dimension .

Now solving the Fredholm integral equation is equivalent to solving a system of linear equations. The standard approach ordinary least squares linear regression, which is to find the vector minimizing the norm . In principle, the Fredholm integral equation may have non-unique solutions, thus the corresponding linear equations are also ill-posed. The most commonly used method for ill-posed problem is Tikhonov regularization which is to minimize

Note that this is actually a subset of Tikhonov regularization (also called Ridge regularization) with being a parameter.

When is a probability density function #

In many cases, both and are probability density function (PDF), and is a conditional PDF, equivalent to . Thus, there are two constraints on the solution , that is and . These two constraints translate to for any and . Hence, we need to solve the Tikhonov regularization problem subject to these two constraints.

In the following, I will show how to solve the Tikhonov regularization problem with both equality and inequality constraints. First, I will show that the Tikhonov regularization problem with non-negative constraint can be easily translated to a regular non-negative least square problem (NNLS) which can be solved using active set algorithm.

Let us construct the matrix,

and the vector,

where is the identity matrix and is the zero vector of size . It is easy to show that the Tikhonov regularization problem subject to is equivalent to the regular NNLS problem,

Now we add the equality constraint, or written in matrix form. My implementation of solving such problem follows the algorithm described in Haskell and Hanson [1]. According to their method, the problem becomes another NNLS problem,

The solution to the above equation converges to the true solution when . Now I have described the algorithm to solve the Fredholm equation of the first kind when is a probability density function. I call the algorithm described above as non-negative Tikhonov regularization with equality constraint (NNETR).

Code #

Here I show the core code of the algorithm described above.

# core algorithm of non-negative equality Tikhonov regularization (NNETR)

def NNETR(K, f, Delta, epsilon, alpha):

# the first step

A_nn = np.vstack((K, alpha * np.identity(K.shape[1])))

b_nn = np.hstack((f, np.zeros(K.shape[1])))

# the second step

A_nne = np.vstack((epsilon * A_nn, np.full(A_nn.shape[1], 1.0)))

b_nne = np.hstack((epsilon * b_nn, 1.0))

# Use NNLS solver provided by scipy

sol, residue = scipy.optimize.nnls(A_nne, b_nne)

# solution should be divided by Delta (grid size)

sol = sol/Delta

return sol, residue

Examples #

- Compounding an exponential distribution with its rate parameter distributed according to a gamma distribution yields a Lomax distribution , supported on , with . is an exponential density and is a gamma density.

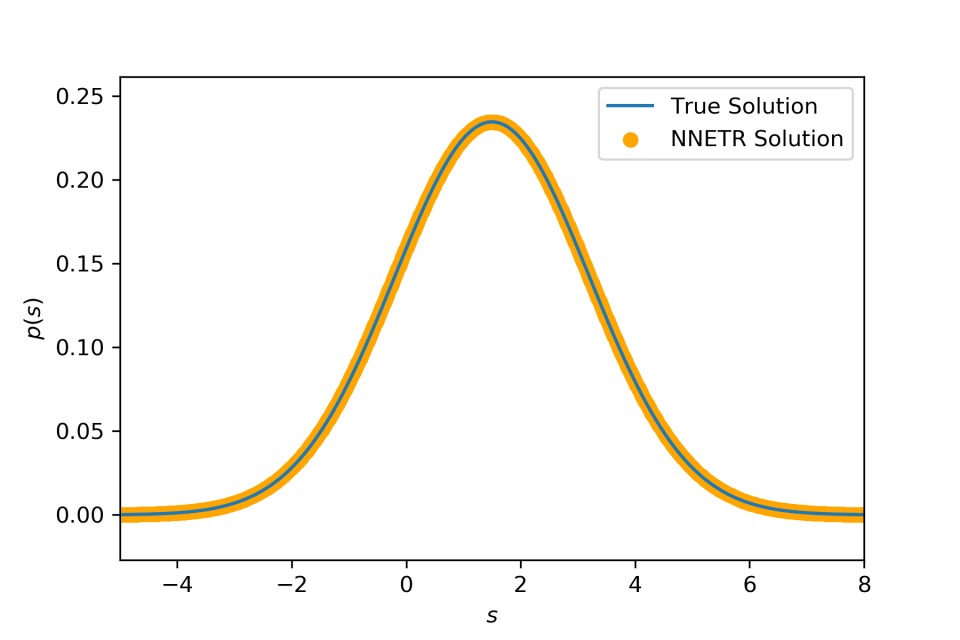

- Compounding a Gaussian distribution with mean distributed according to another Gaussian distribution yields (again) a Gaussian distribution . and

- Compounding an exponential distribution with its rate parameter distributed according to a mixture distribution of two gamma distributions. Similar to the first example, we use . But here we use where , and are parameters. It is clear that is a mixture between two different gamma distributions such as it is a bimodal distribution. Following the first example, we have where .

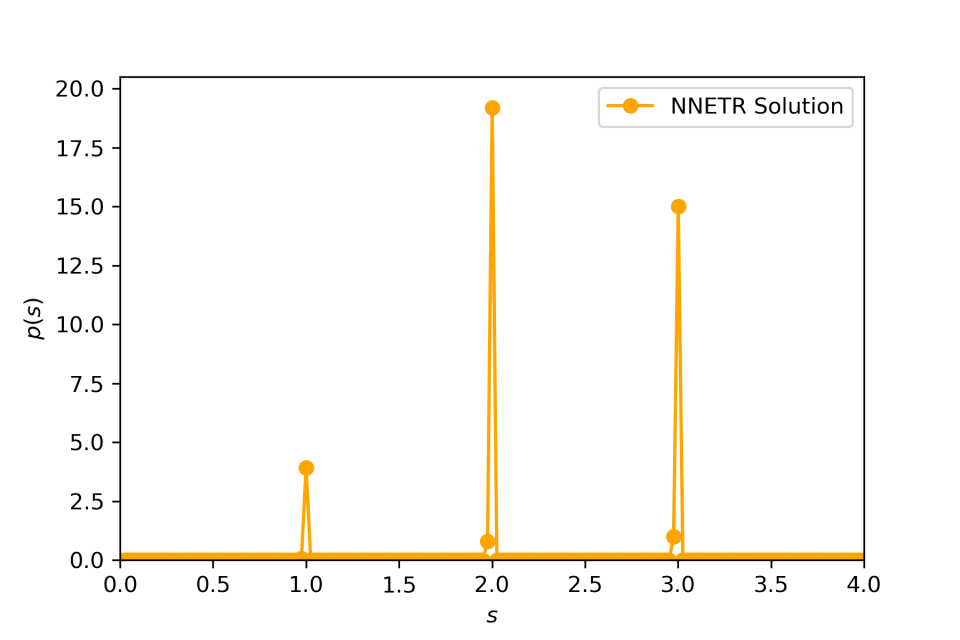

- Compounding an exponential distribution with its rate parameter distributed as a discrete distribution.

Haskell, Karen H., and Richard J. Hanson. “An algorithm for linear least squares problems with equality and nonnegativity constraints.” Mathematical Programming 21.1 (1981): 98 – 118. ↩︎